Adoption of artificial intelligence (AI) applications keeps growing worldwide. At the same time, the capabilities of the computing solutions that enable AI are evolving rapidly. The result is unprecedented innovation.

Today, the processor (logic) side attracts most of the attention of business leaders and investors for its contribution to AI. Processors are of course essential for AI and high-performance computing. But AI's success does not depend solely on compute and ultrafast performance. It is just as important to recognize that AI applications also rely on data storage, which provides the initial repository of raw data, enables the checkpoints that make AI workflows more reliable, and stores the inferences and results of AI analyses.

Any effective AI implementation requires synergy between compute resources and data storage resources.

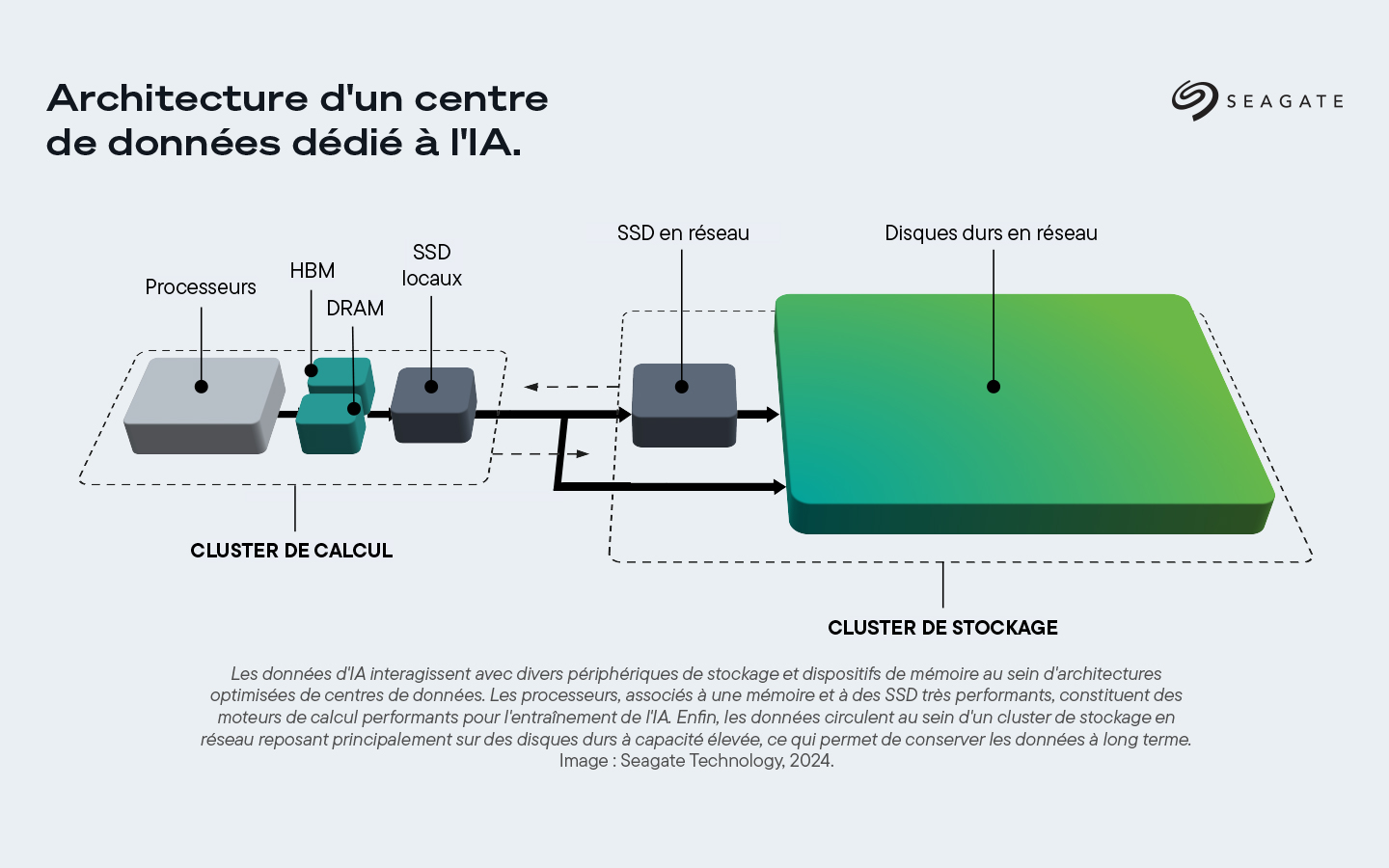

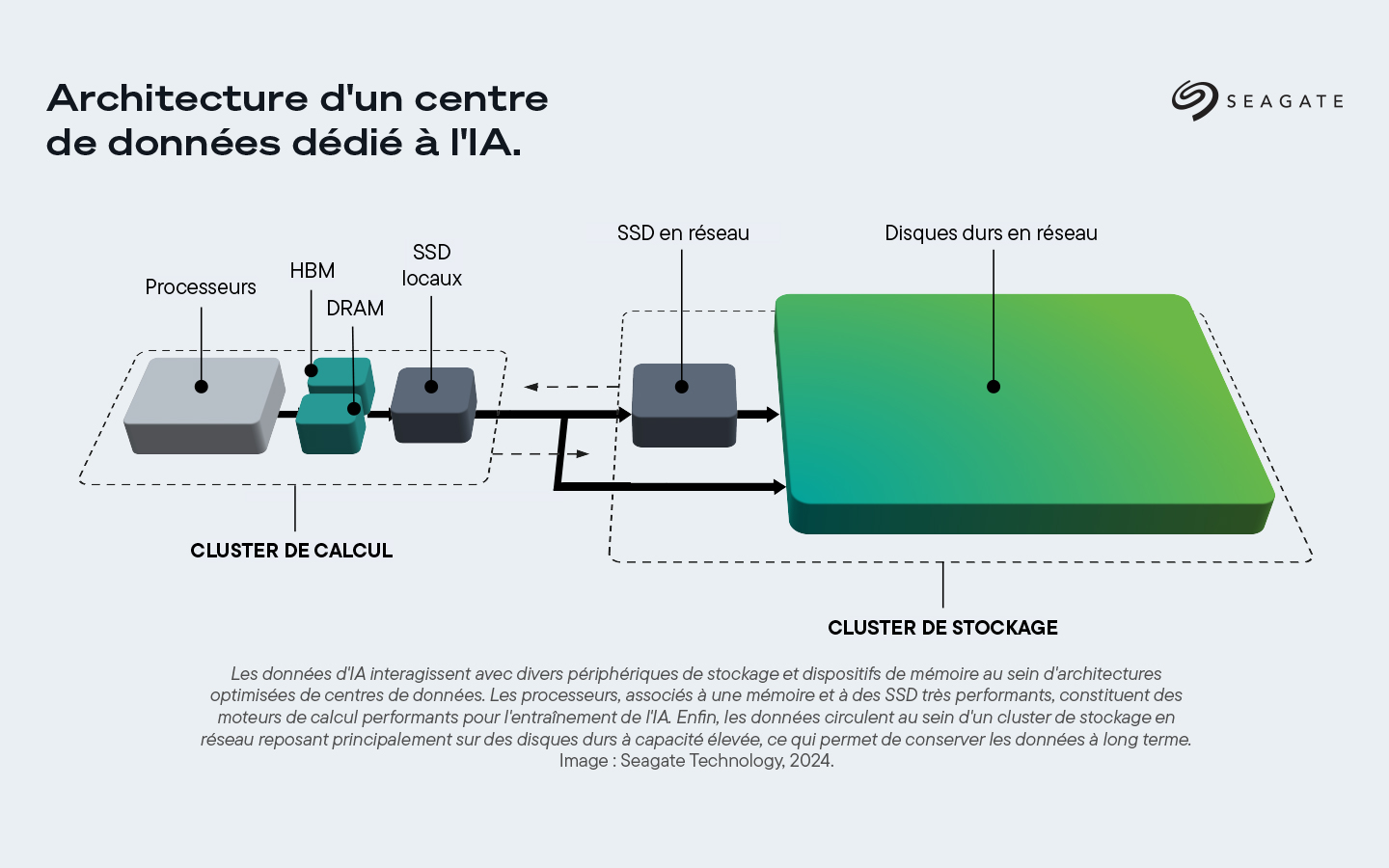

As large data centers scale up their AI capabilities, it becomes clear that AI applications do not rely solely on the compute side of an AI data center architecture. The compute cluster comprises high-performance processors, high-bandwidth memory (HBM), dynamic random-access memory (DRAM), and very fast local SSDs, which together form the powerful engine of AI training. The components of the compute cluster are local and usually sit next to one another, because any distance could introduce latency and performance problems.

AI applications also depend on the storage cluster, which includes hard drives and high-capacity network SSDs (these must offer more capacity than the faster local SSDs of the compute cluster). The storage cluster is networked (distributed), because raw storage speed matters less at large scale. Distance between components is a smaller factor in total latency here than in the compute cluster, where expected latencies can be on the order of nanoseconds. Data is then moved to the storage cluster, which consists mainly of massive-capacity hard drives, for long-term retention.

This article examines how compute and storage functions complement each other across the different phases of a typical AI workflow.

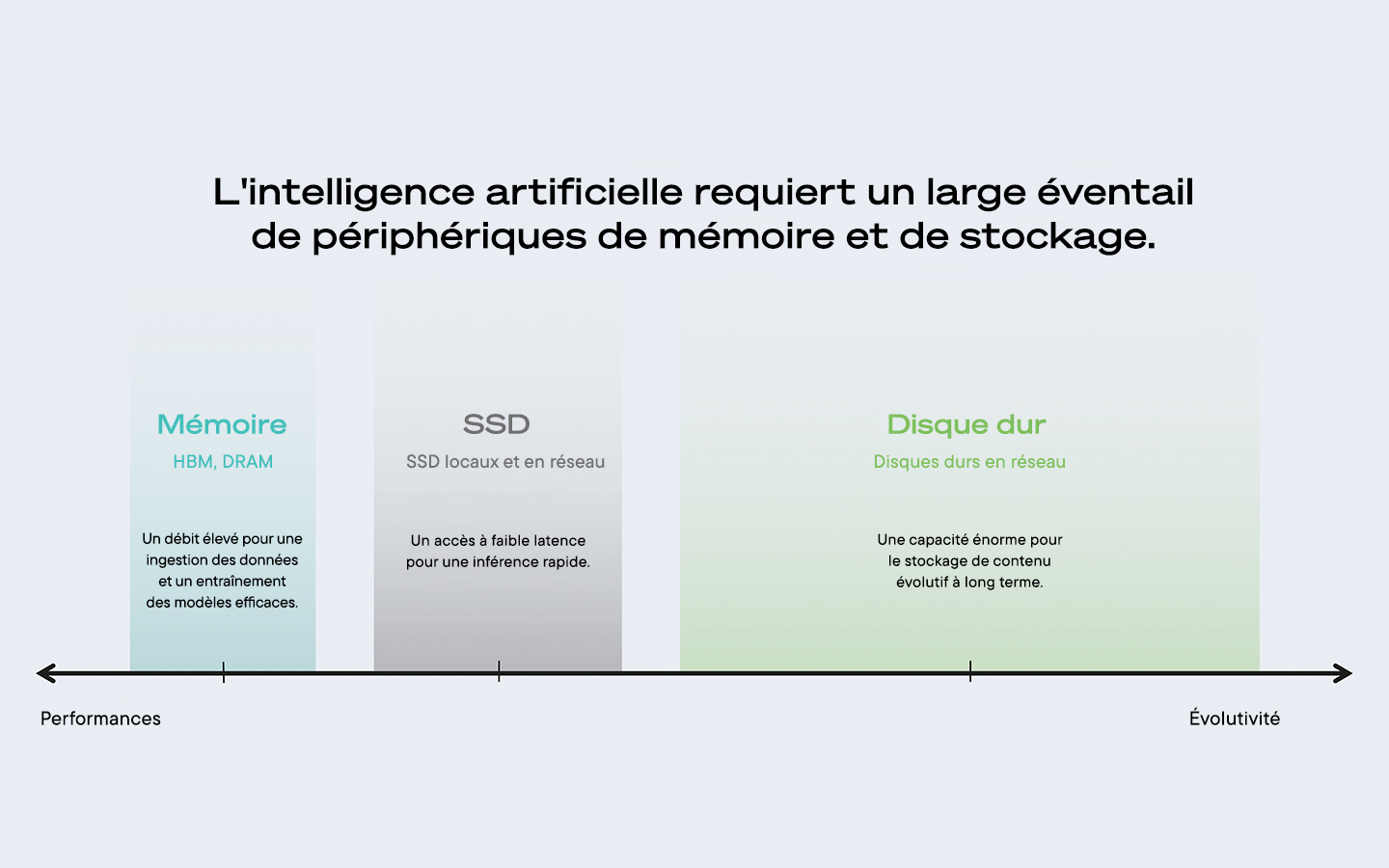

Performance and scalability for AI.

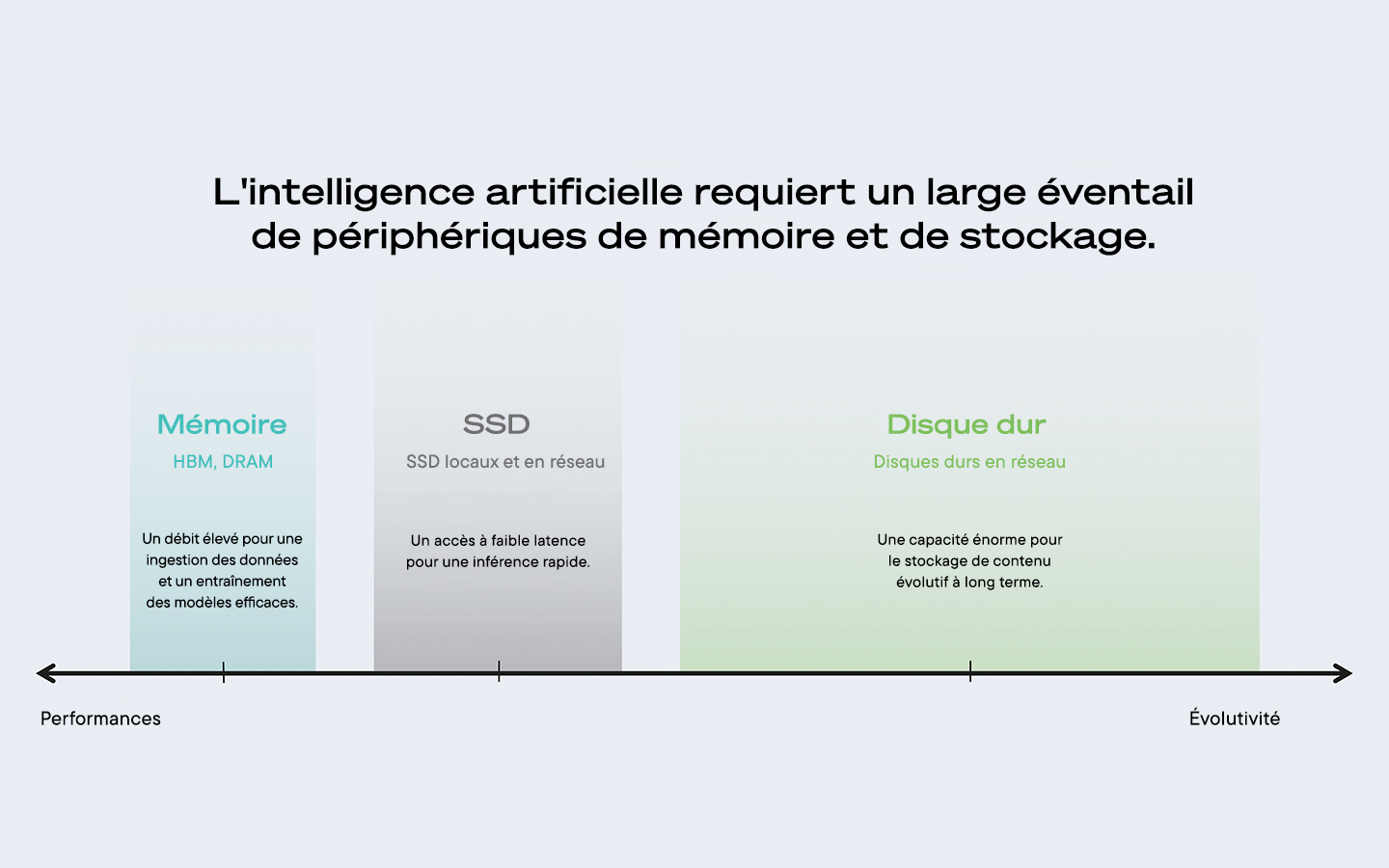

Some technologies in AI workflows offer higher performance and others greater scalability, but each is an integral part of the process. Device memory is very high-performance and typically consists of HBM or DRAM paired with the various processor types: graphics processing units (GPUs), central processing units (CPUs), and data processing units (DPUs). DPUs are offload engines attached to the processors that complement them on specific tasks; some architectures use them, others do not. The high throughput of memory enables efficient data ingestion and model training.

The low latency and capacity of SSDs enable fast inference and frequent access to stored content. In an AI data center architecture, high-performance local SSDs are built into the compute cluster, close to the processors and memory. Local SSDs typically use triple-level cell (TLC) NAND flash and offer high endurance, but they are generally more expensive than network SSDs and do not offer the same capacity.

Network SSDs, which offer greater data storage capacity than local SSDs, sit in the storage cluster and take on other specific responsibilities throughout the AI application workflow. They are not as fast as local SSDs, and they offer lower endurance in drive writes per day (DWPD), but they compensate with higher capacity.

Network hard drives, which are also part of the storage cluster in the AI data center architecture, are the most scalable and efficient storage devices for AI workflows. They have slower access speeds but very high capacities, which makes them ideal for data that does not require frequent, fast access.
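To make the tiering above concrete, here is a minimal Python sketch that assigns a piece of data to one of the four tiers based on whether it is in active use and how often it is read. The `Tier` names mirror the article's terms; the function `choose_tier` and its two thresholds are hypothetical, illustrative values, not vendor guidance.

```python
from enum import Enum


class Tier(Enum):
    """The four tiers of the AI data center architecture described in the article."""
    DEVICE_MEMORY = "HBM/DRAM (compute cluster)"
    LOCAL_SSD = "local SSD (compute cluster)"
    NETWORK_SSD = "network SSD (storage cluster)"
    NETWORK_HDD = "network HDD (storage cluster)"


def choose_tier(
    in_active_use: bool,
    reads_per_hour: float,
    hot_threshold: float = 100.0,  # hypothetical cut-off for "fast, frequent" access
    warm_threshold: float = 1.0,   # hypothetical cut-off for "readily available" data
) -> Tier:
    """Pick a tier from the access pattern, falling through to higher-capacity
    tiers as access becomes rarer. Thresholds are illustrative assumptions."""
    if in_active_use:
        # Data being processed right now stays next to the processors.
        return Tier.DEVICE_MEMORY
    if reads_per_hour >= hot_threshold:
        # Frequently read data benefits from low-latency local SSDs.
        return Tier.LOCAL_SSD
    if reads_per_hour >= warm_threshold:
        # Shared data that must stay readily accessible.
        return Tier.NETWORK_SSD
    # Rarely accessed, high-volume data goes to high-capacity hard drives.
    return Tier.NETWORK_HDD
```

Real placement policies also weigh cost, capacity headroom, and durability requirements; this sketch captures only the access-frequency dimension the article emphasizes.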

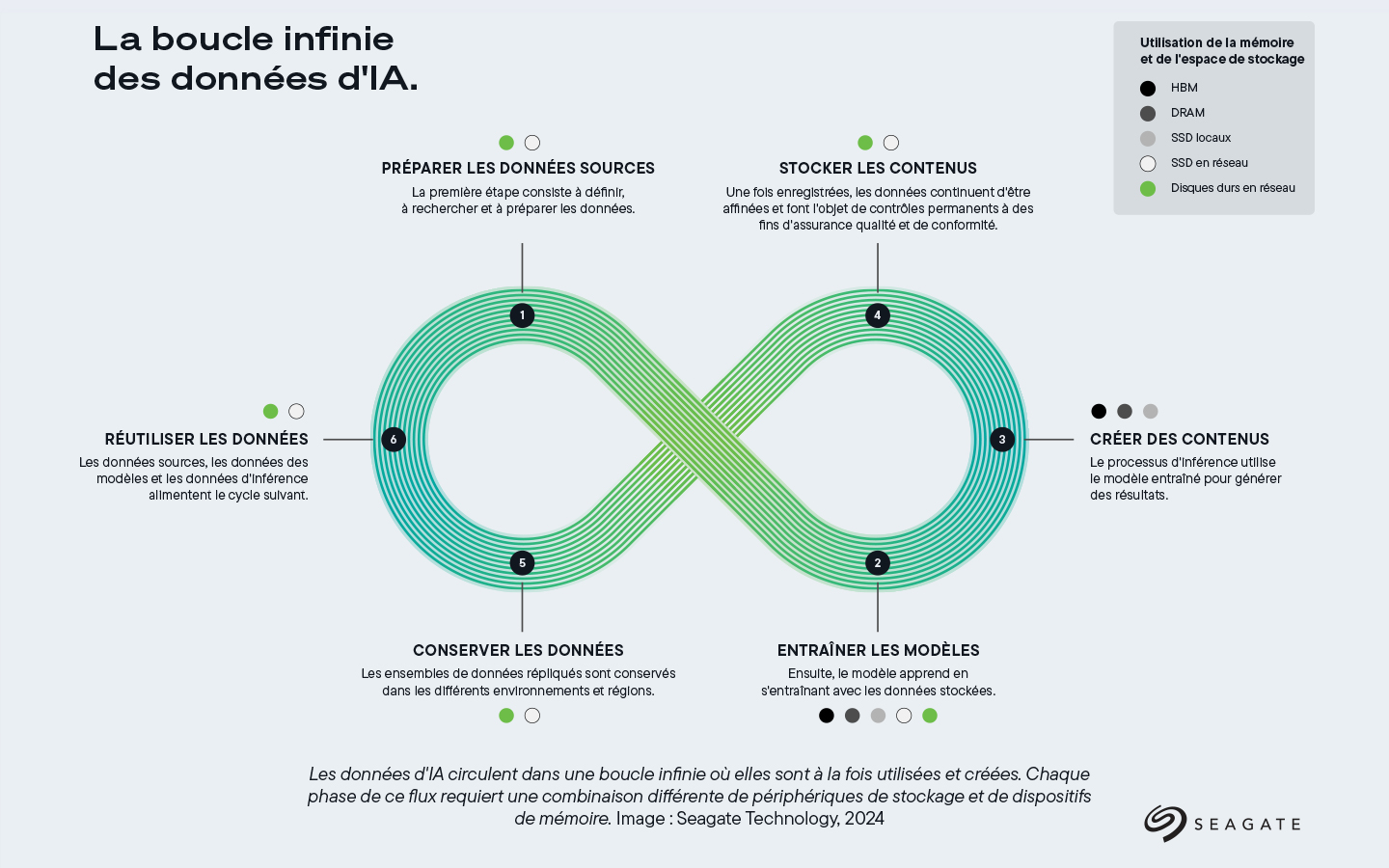

The infinite AI loop.

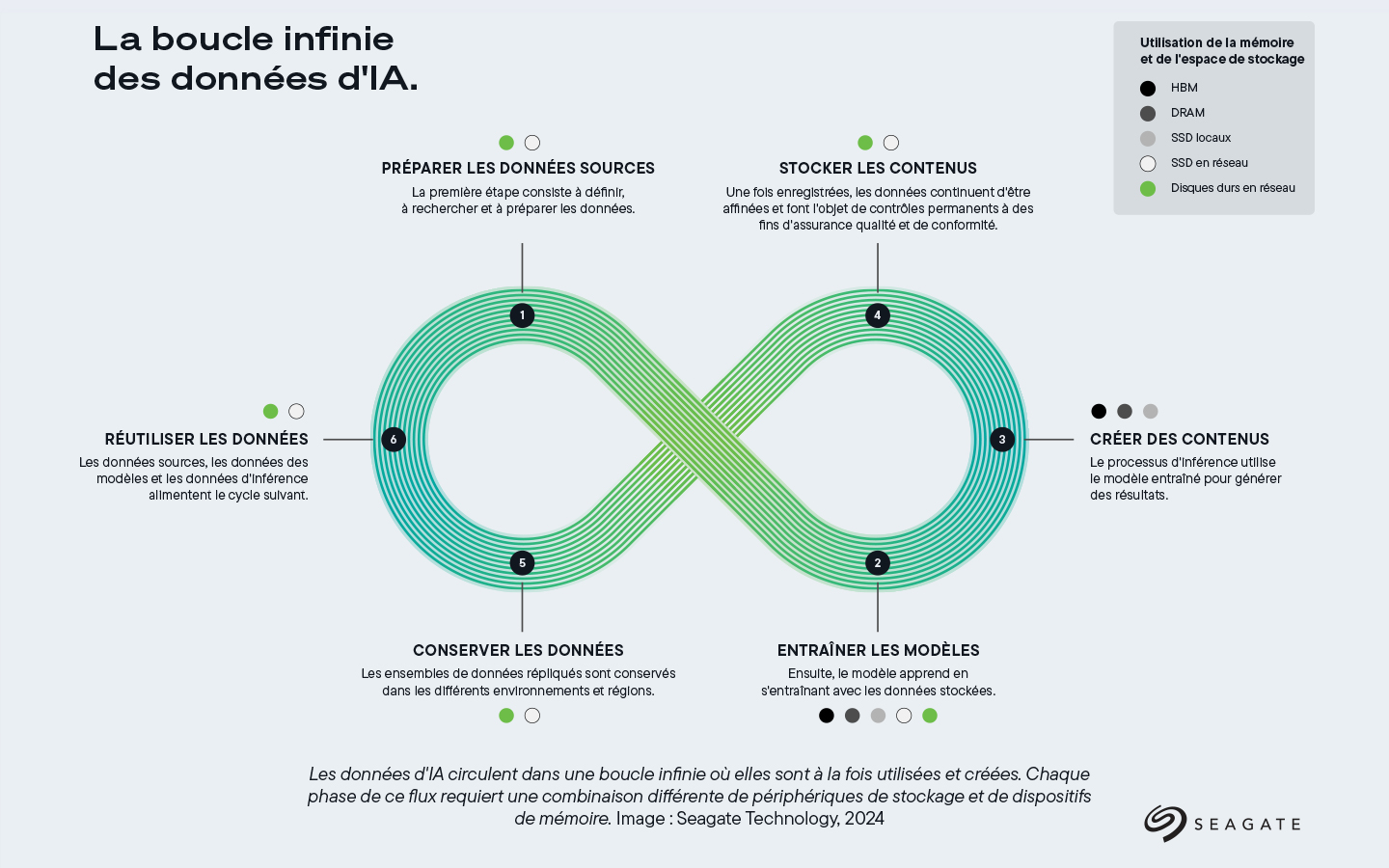

AI workflows operate in an infinite loop of consumption and creation that requires not only memory and processors for compute, but also storage components. The interdependent stages of an AI workflow are data sourcing, model training, content creation, content storage, data retention, and data reuse. Let's look at the roles compute and storage play in each of these phases.
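The loop can be modeled as an ordered cycle in which the last stage hands off to the first. A minimal Python sketch, assuming nothing beyond the six stage names listed above (`Stage` and `next_stage` are illustrative names):

```python
from enum import Enum


class Stage(Enum):
    """The six interdependent stages of the AI workflow loop, in order."""
    DATA_SOURCING = 1
    MODEL_TRAINING = 2
    CONTENT_CREATION = 3
    CONTENT_STORAGE = 4
    DATA_RETENTION = 5
    DATA_REUSE = 6


def next_stage(stage: Stage) -> Stage:
    """Return the following stage; data reuse wraps back to data sourcing,
    which is what makes the loop infinite."""
    members = list(Stage)
    return members[(members.index(stage) + 1) % len(members)]
```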

Step 1: data sourcing.

The data sourcing phase involves defining, discovering, and preparing data for AI analysis.

Compute: GPUs play a fundamental role in the data sourcing phase by accelerating high-speed data preprocessing and transformation. They complement CPUs by running repetitive computations in parallel while the main application runs on the CPU. The CPU acts as the main unit, handling a range of general-purpose computing tasks, while the GPU performs a narrower set of more specialized tasks.

Storage: In the data sourcing phase, network SSDs and network hard drives store the enormous volumes of data needed to create new data. Network SSDs provide an immediately accessible data tier and therefore faster performance. Network hard drives provide extensive, dense, scalable capacity, along with long-term retention and protection of the raw data.

Step 2: model training.

In the model training stage, the model learns from the stored data. Training is an experimental process in which the model converges, protected along the way by checkpoints. Ultrafast data access is essential at this stage.

Compute: GPUs are essential during model training because their parallel processing capabilities let them handle the massive computational loads of deep learning. AI training involves thousands of matrix multiplications, which GPUs process simultaneously; this speeds up the process and makes it possible to train complex models with billions of parameters. CPUs work alongside GPUs to orchestrate the flow of data between memory and compute resources. They handle tasks such as batch preparation and queue management, so that the right data reaches the GPUs at the right time. They also take care of tuning the model's hyperparameters, performing calculations that do not necessarily require the GPUs' parallel processing power.
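The CPU's job of preparing batches and managing queues so that GPUs are fed on time can be sketched as a bounded producer/consumer pipeline. In this minimal Python sketch, a background thread plays the CPU-side preparer and the main loop stands in for the GPU; `train_step`, the batch size, and the prefetch depth are hypothetical placeholders, not part of any specific training framework.

```python
import queue
import threading
from typing import Callable, Iterator, List, Optional

Batch = List[int]


def make_batches(samples: List[int], batch_size: int) -> Iterator[Batch]:
    """CPU-side batch preparation: split samples into consecutive batches."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


def producer(samples: List[int], batch_size: int, q: "queue.Queue[Optional[Batch]]") -> None:
    """CPU-side queue management: keep the bounded queue topped up."""
    for batch in make_batches(samples, batch_size):
        q.put(batch)  # blocks while the queue is full, so preparation never runs too far ahead
    q.put(None)  # sentinel telling the consumer that no batches remain


def run_pipeline(
    samples: List[int],
    batch_size: int = 4,
    prefetch: int = 2,
    train_step: Callable[[Batch], None] = lambda batch: None,
) -> int:
    """Apply the hypothetical train_step to every batch; return the step count."""
    q: "queue.Queue[Optional[Batch]]" = queue.Queue(maxsize=prefetch)
    worker = threading.Thread(target=producer, args=(samples, batch_size, q))
    worker.start()
    steps = 0
    while True:
        batch = q.get()
        if batch is None:
            break
        train_step(batch)  # stand-in for the GPU's training step
        steps += 1
    worker.join()
    return steps
```

The bounded queue is the key design choice: with `maxsize=prefetch`, the producer stays at most a few batches ahead, so batches are ready when the consumer asks for them while memory use stays capped.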

For model training, HBM and DRAM are essential for fast data access because they keep active datasets close to the processors. HBM, which is typically integrated with GPUs, greatly increases the speed at which data can be processed by keeping the most frequently used data available to the GPUs during training.

Local SSDs provide fast-access storage for the datasets used in this phase. They store intermediate training results and allow large datasets to be retrieved quickly. They are particularly useful for training models that need fast access to large amounts of data, such as image recognition models trained on millions of images.

Storage: Hard drives store the vast amounts of data needed to train AI models at low cost. In addition to providing the necessary scalable capacity, hard drives help preserve data integrity by storing and protecting replicated versions of the created content. Hard drives are more economical than other storage options; they provide reliable long-term storage as well as efficient retention and management of large datasets.

Among other things, network hard drives and network SSDs store checkpoints to protect and refine model training. Checkpoints are snapshots of a model's state saved at specific points during training, fine-tuning, and adaptation. These snapshots can be consulted later to demonstrate intellectual property or to show how the algorithm reached its conclusions. When SSDs are used for checkpointing, checkpoints are written at frequent intervals (for example, every minute) thanks to the SSDs' low-latency access. However, because SSD capacity is small compared with that of hard drives, these checkpoints are usually overwritten after a short time. Conversely, checkpoints saved to hard drives are usually written at longer intervals, but they can be kept almost indefinitely thanks to the scalable capacity of hard drives.
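The two checkpoint cadences described above can be sketched as a small retention policy: frequent checkpoints go to a fixed number of SSD slots that get overwritten, while less frequent ones go to hard drives and are kept. In this minimal Python sketch, the one-minute SSD interval follows the article's example; the 30-minute HDD interval, the five SSD slots, and the class name `TieredCheckpointer` are hypothetical. Model state is a placeholder value rather than real checkpoint files.

```python
from collections import deque
from typing import Any, Deque, List, Optional, Tuple

Checkpoint = Tuple[int, Any]  # (training minute, placeholder for model state)


class TieredCheckpointer:
    """Hypothetical two-tier checkpoint policy: SSD ring buffer plus HDD archive."""

    def __init__(self, ssd_every: int, hdd_every: int, ssd_slots: int) -> None:
        self.ssd_every = ssd_every
        self.hdd_every = hdd_every
        # A deque with maxlen drops its oldest entry when full, mimicking
        # SSD checkpoints being overwritten after a short time.
        self.ssd: Deque[Checkpoint] = deque(maxlen=ssd_slots)
        # A plain list: HDD checkpoints are retained indefinitely in this model.
        self.hdd: List[Checkpoint] = []

    def on_minute(self, minute: int, state: Any) -> None:
        if minute % self.ssd_every == 0:
            self.ssd.append((minute, state))
        if minute % self.hdd_every == 0:
            self.hdd.append((minute, state))

    def latest(self) -> Optional[Checkpoint]:
        """Most recent checkpoint across both tiers, or None if there is none."""
        candidates = list(self.ssd) + self.hdd
        return max(candidates, key=lambda c: c[0]) if candidates else None


ckpt = TieredCheckpointer(ssd_every=1, hdd_every=30, ssd_slots=5)
for minute in range(1, 61):  # one simulated hour of training
    ckpt.on_minute(minute, f"state@{minute}")
```

After one simulated hour, the SSD tier holds only the last five minutes while the HDD tier keeps the 30- and 60-minute snapshots; `latest()` returns the checkpoint a restart would normally resume from.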

Step 3: content creation.

The content creation phase involves inference, which uses the trained model to produce outputs.

Compute: During content creation, GPUs run AI inference tasks, applying the trained model to new data inputs. Their parallelism lets GPUs perform many inferences simultaneously, which makes them indispensable for real-time applications such as video generation or conversational AI systems. While GPUs handle most of the compute work during content creation, CPUs are essential for managing control logic and running operations that require serial processing. This includes script generation, handling user input, and running lower-priority background tasks that do not need a GPU's high throughput.

The content creation stage uses HBM and DRAM. Memory plays a central role here in real-time data access, temporarily storing AI inference results and feeding them back into the model for refinement. High-capacity DRAM allows multiple iterations of content creation without slowing the workflow, especially in applications such as video generation or real-time image processing.

During content creation, local SSDs provide the high read/write speeds required for real-time processing. Whether the AI is generating new images, videos, or text, SSDs let the system handle frequent, high-speed I/O operations without bottlenecks, ensuring that content is produced quickly.

Storage: The main storage players in this creation stage are HBM, DRAM, and local SSDs.

Step 4: content storage.

During the content storage phase, new data is saved for continuous refinement, quality assurance, and compliance.

Compute: Although they are not directly involved in long-term storage, GPUs and CPUs can take part in compressing or encrypting data as it is prepared for storage. Because they can process large volumes of data quickly, content is ready for archiving without delay. Memory acts as a temporary cache before data is moved to long-term storage. DRAM speeds up write operations so that AI-generated content can be saved quickly and efficiently. This is especially important for real-time AI applications, where delays in storing data can create bottlenecks.
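Preparing content for storage, as described above, can be sketched with Python's standard `zlib` and `hashlib` modules: compress the bytes and record a checksum so the stored copy can be verified when it is read back. This is a minimal sketch; the function names are hypothetical, compression runs on the CPU here, and encryption is left out because it would require a third-party library.

```python
import hashlib
import zlib

# Encryption, also mentioned in the article, is deliberately left out of this sketch.


def prepare_for_storage(content: bytes, level: int = 6) -> dict:
    """Compress content and record a checksum before it is written to storage."""
    return {
        "payload": zlib.compress(content, level),
        "sha256": hashlib.sha256(content).hexdigest(),  # checksum of the original bytes
        "original_size": len(content),
    }


def restore(record: dict) -> bytes:
    """Decompress a stored record and verify it against the recorded checksum."""
    data = zlib.decompress(record["payload"])
    if hashlib.sha256(data).hexdigest() != record["sha256"]:
        raise ValueError("integrity check failed")
    return data
```

Storing the checksum alongside the payload lets a later read detect corruption, which supports the quality-assurance and compliance goals of this stage.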

Storage: The content storage phase relies on both network SSDs and network hard drives, which save the data for continuous refinement, quality assurance, and compliance. Network SSDs provide a speed-optimized data tier and are used for short-term, ultrafast storage of AI-generated content. Because their capacity is smaller than that of hard drives, SSDs typically hold frequently used content or content that must be immediately available for editing or refinement.

The iteration process produces new validated data that must be stored. Once saved, this data continues to be refined and is continuously checked for quality assurance and compliance. Hard drives store and protect replicated versions of the created content and provide the capacity needed to hold the content generated by AI processes. They are particularly well suited to this because they offer high storage capacity at a relatively low cost compared with other storage solutions such as SSDs.

Step 5: data retention.

During the data retention phase, replicated datasets are kept across regions and environments. This stage relies mainly on storage resources.

Storage: Stored data is the backbone of trustworthy AI, allowing data scientists to verify that models behave as intended. Network SSDs bridge the hard drives and the local SSD tier and help data flow through the ecosystem.

Hard drives play an essential role in storing and protecting data over the long term. They retain the outputs of AI content creation, securely storing generated content so that it is accessible when needed. They also provide the scalability required to handle growing data volumes efficiently.
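Stage 5 keeps replicated datasets across regions. One way to decide deterministically which regions hold the copies of a given object is rendezvous (highest-random-weight) hashing. This is a technique chosen here for illustration, not something the article prescribes; the function name `replica_regions`, the region names, and the number of copies are hypothetical.

```python
import hashlib
from typing import List


def replica_regions(object_key: str, regions: List[str], copies: int) -> List[str]:
    """Choose `copies` distinct regions for an object via rendezvous hashing."""
    if copies > len(regions):
        raise ValueError("not enough regions for the requested number of copies")

    def score(region: str) -> int:
        # Each (object, region) pair gets a pseudo-random but stable weight.
        digest = hashlib.sha256(f"{object_key}:{region}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # The highest-weight regions win; the result depends only on the inputs.
    return sorted(regions, key=score, reverse=True)[:copies]
```

A useful property of this scheme: removing a region that does not hold a copy leaves the object's placement unchanged, so only data on a departing region has to move.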

Step 6: data reuse.

Finally, in the data reuse stage, source, training, and inference data are fed into the next iteration of the workflow.

Compute: GPUs play an important role in the data reuse phase by rerunning models on archived datasets to obtain new inferences or further training, which restarts the AI data cycle. Their ability to run parallel computations on large datasets lets AI systems continuously improve model accuracy with minimal time investment. CPUs query and retrieve stored data for reuse. They efficiently filter and process historical data, feeding relevant subsets back into the training models. In large-scale AI systems, CPUs often perform these tasks while also managing the interactions between storage systems and compute clusters.

When historical data is retrieved for reuse in a new iteration of AI model analysis, memory ensures fast access to large datasets. HBM allows datasets to be loaded quickly into GPU memory, where they can be used immediately for retraining or real-time inference.

Storage: Outputs are fed back into the model, improving accuracy and enabling new models to be created. Network SSDs and network hard drives make geographically distributed AI data possible. Raw datasets and outputs serve as sources for new workflows. SSDs speed up retrieval of previously stored data; their low-latency access lets this data be reintegrated quickly into AI workflows, reducing wait times and increasing overall system efficiency. Hard drives meet the bulk storage requirements of the AI data reuse phase, enabling the next iteration of the model at a reasonable cost.
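The idea that SSDs speed up retrieval of data previously stored on hard drives can be sketched as a least-recently-used (LRU) read cache in front of a larger, slower tier. In this minimal Python sketch, a dictionary stands in for the hard-drive tier and an `OrderedDict` for the SSD tier; the class name `SsdReadCache` and a capacity counted in objects rather than bytes are hypothetical simplifications.

```python
from collections import OrderedDict
from typing import Dict, Hashable


class SsdReadCache:
    """LRU cache (standing in for an SSD tier) in front of a hard-drive tier."""

    def __init__(self, hdd: Dict[Hashable, bytes], ssd_capacity: int) -> None:
        self.hdd = hdd  # hypothetical HDD tier: holds every object
        self.ssd: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.ssd_capacity = ssd_capacity  # number of objects the SSD tier can hold
        self.hits = 0
        self.misses = 0

    def read(self, key: Hashable) -> bytes:
        if key in self.ssd:
            self.ssd.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.ssd[key]
        self.misses += 1
        value = self.hdd[key]  # slower path: fetch from the hard drives
        self.ssd[key] = value  # promote to the SSD tier for later reuse
        if len(self.ssd) > self.ssd_capacity:
            self.ssd.popitem(last=False)  # evict the least recently used object
        return value
```

Every object still lives on the hard-drive tier; the SSD tier only holds the working set that is being reused, which mirrors the division of labor the article describes.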

Storage is the backbone of trustworthy AI.

As we have seen, AI workflows need high-performance processors as well as data storage solutions. Device memory and SSDs have their place in AI applications because their ultrafast performance enables fast inference. But we like to think that hard drives are the backbone of AI: they are essential because of their cost-effective scalability, which many AI workflows require.

Seagate hard drives built on Mozaic 3+™ technology, a unique implementation of HAMR (heat-assisted magnetic recording), are an obvious choice for AI applications thanks to their advantages in areal density, efficiency, and space optimization. They offer unprecedented areal density, with more than 3 TB per platter. They are currently available in capacities starting at 30 TB and are shipping in volume to customers looking for an ultra-scalable solution. Seagate is already testing the Mozaic platform that will exceed 4 TB and 5 TB per platter.
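Per-platter areal density translates directly into drive capacity when the number of platters is fixed. As a worked example, assuming a hypothetical 10-platter drive (the article does not state a platter count), with $n_{\text{platters}}$ platters holding $d_{\text{platter}}$ terabytes each:

$$
C_{\text{drive}} = n_{\text{platters}} \times d_{\text{platter}}
$$

$$
10 \times 3\ \text{TB} = 30\ \text{TB}, \qquad 10 \times 4\ \text{TB} = 40\ \text{TB}, \qquad 10 \times 5\ \text{TB} = 50\ \text{TB}
$$

Under that assumption, going from 3 TB to 5 TB per platter would raise capacity by about two-thirds in the same drive footprint, which is why areal density matters for space optimization.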

Compared with the current generation of hard drives using perpendicular magnetic recording (PMR), Mozaic 3+ drives consume four times less power in operation and emit ten times less embodied carbon per terabyte.

For AI workloads, compute and storage go hand in hand. Compute-oriented memory and processing, along with high-performance SSDs, are essential for AI applications. So are scalable, high-capacity data storage solutions, and Seagate hard drives are establishing themselves as pioneers in this area.