As AI becomes embedded in a growing number of industries and use cases, its value depends more and more on how reliable its outputs are. Trustworthy AI has become a highly prized asset.

Building trustworthy AI rests on key elements that make both data and outputs dependable. This article explores the roles of transparency, data lineage, explainability, accountability, and security in creating reliable AI systems. Each of these elements supports the integrity and reliability of data, which are essential to AI success. Hard drives, for their part, provide the storage backbone needed to sustain these benefits over time.

Trustworthy AI is made up of AI data workflows that consume reliable inputs and produce reliable insights. It depends on data that meets the following criteria:

- high quality and accuracy,

- legality, ownership, and provenance,

- secure storage and protection,

- algorithmic transformations that are explainable and traceable,

- consistent, reliable results from data processing.

Scalable storage infrastructure supports trustworthy AI by making it possible to manage, store, and secure the large volumes of data that AI systems use.

Trustworthy AI in large data centers.

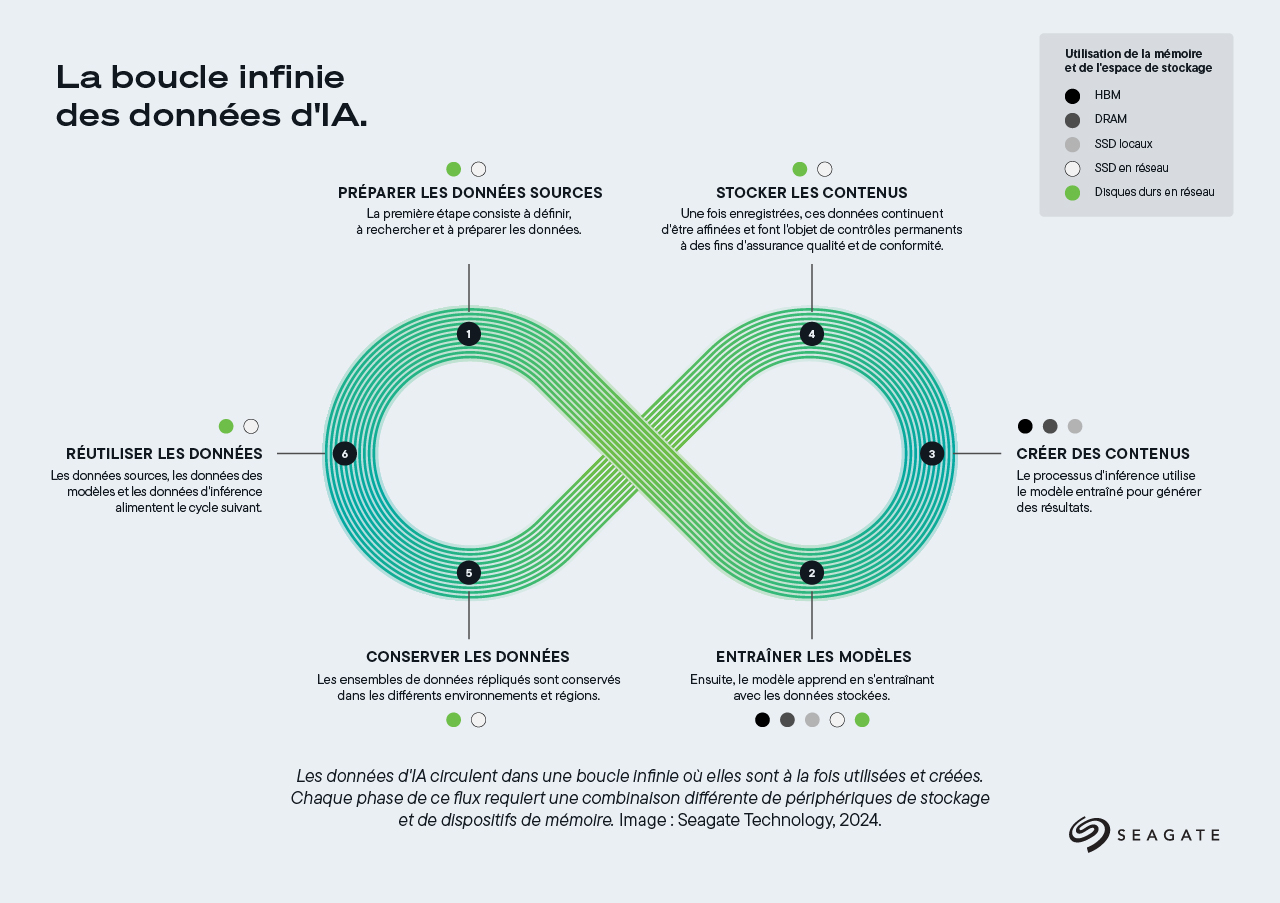

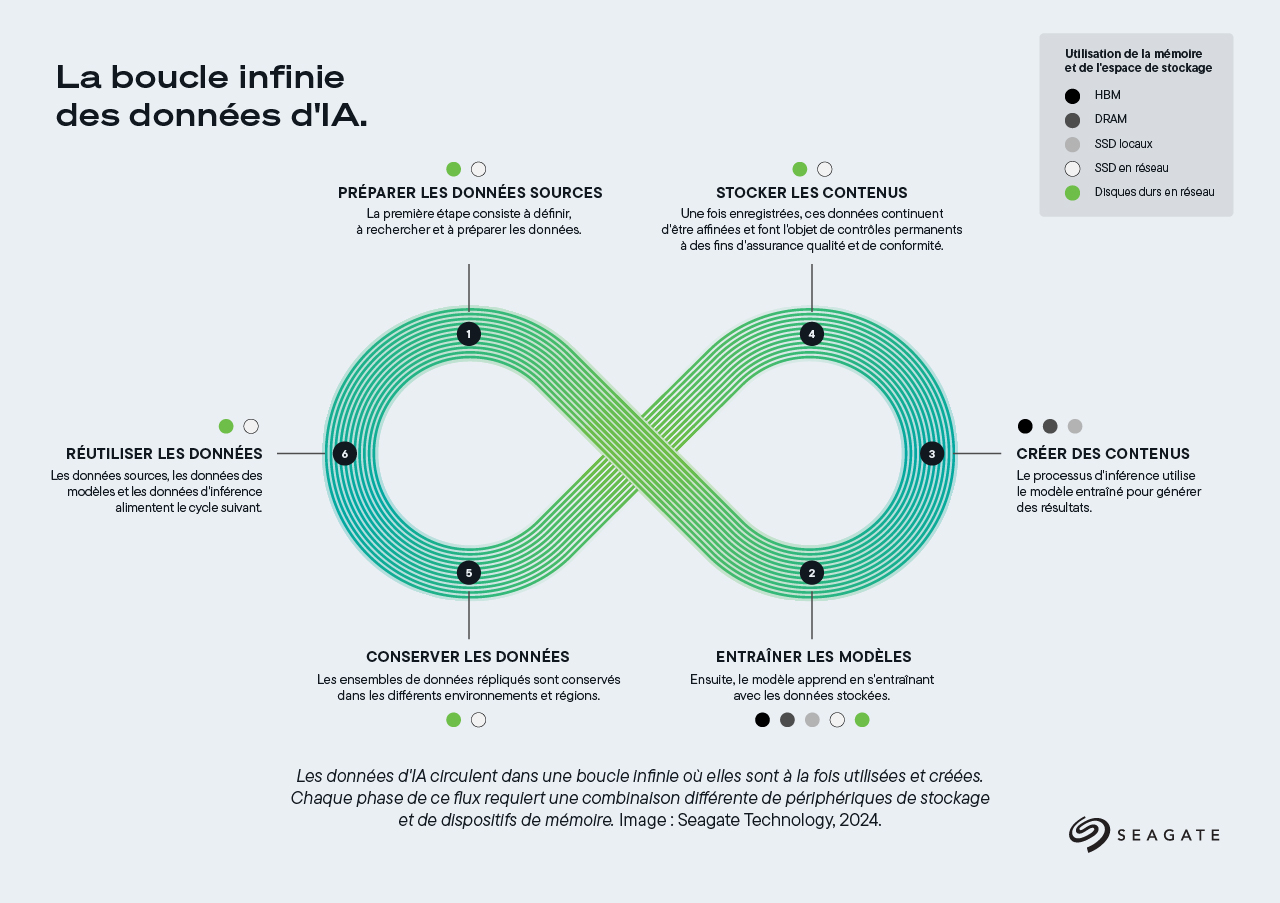

AI processes involve enormous volumes of data that require robust infrastructure to manage effectively. To handle these massive datasets, data centers running AI workloads are equipped with scalable storage clusters that integrate object stores and data lakes. This infrastructure supports the entire AI data loop, from sourcing raw data to preserving model outputs for future use.

Without the scalability and efficiency of data centers, AI's potential would remain limited, because the ability to store and retrieve large datasets is indispensable to AI success.

Modern AI-optimized architectures balance compute, storage, and network layers. Data lakes and object stores, which often span several storage tiers, form the foundation of AI environments and deliver high-performance capacity at scale. Storage infrastructure is what gives AI systems access both to data that needs immediate access and to archived data. Architectures built for AI are designed for massive scalability, and it is the balance between storage capacity and performance that lets AI systems run efficiently and scale with demand.

The key elements of trustworthy AI.

Scalable architectures are not enough, however. Trustworthy AI must also integrate components that foster reliability: transparency, data lineage, explainability, accountability, and security. Let's look at how each one contributes to the integrity of AI workflows.

Transparency: making AI understandable.

Transparency at scale is key to trustworthy AI. It ensures that decisions made by AI systems are understandable, accessible, reproducible, and correctable. When an AI system recommends a film, for example, transparency helps users understand the reasoning behind the suggestion by showing that it rests on clear data, such as the user's viewing history and preferences.

In data centers, scalable storage clusters support transparency by keeping clear records of every decision point in the AI data lifecycle. With this infrastructure, organizations can track data from its origin, through processing, to the final output, which fosters greater accountability.

Transparency matters in many industries. Here are three examples:

- In healthcare, AI algorithms analyze medical images and help detect diseases earlier. Transparent data makes it possible to check what each diagnosis is based on, which supports its accuracy.

- In finance, trading algorithms process market data quickly and filter it more efficiently, helping portfolio managers better understand and optimize their investment strategies. Reliable data helps improve return on investment.

- In natural language processing (NLP), chatbots answer customer queries. Transparent data lineage lets companies remain accountable for those answers.

Transparency fosters greater accountability by making data, decisions, and model outputs clear.

Data lineage: tracking data sources and their use.

Data lineage is the ability to identify where datasets come from (their provenance) and how they are used throughout the AI process, which is essential for understanding how models arrive at their decisions.

In healthcare AI applications, for example, data lineage shows which datasets were used to reach a diagnosis, so the sources of information are known.

By giving a clear view of the data cycle from input to output, lineage lets organizations verify the origin and use of datasets and thus make sure AI models rely on accurate data. Because it tracks data at every stage of the process, data lineage enables a full audit of AI systems, supporting both regulatory compliance and internal accountability. Hard drives support data lineage by securely storing each transformation, so developers can review the history of the data and understand the AI's entire decision-making process.
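
To make the idea of lineage concrete, here is a minimal sketch of an append-only lineage log, assuming each transformation is recorded as a step that names its input artifacts and the single artifact it produces. The `LineageLog` class, its methods, and the artifact names are hypothetical illustrations rather than part of any product or library, and the sketch assumes the recorded steps form an acyclic graph.

```python
from dataclasses import dataclass, field


@dataclass
class LineageStep:
    """One transformation in a dataset's history (hypothetical schema)."""
    name: str           # e.g. "clean", "label", "train"
    inputs: list[str]   # IDs of the artifacts this step consumed
    output: str         # ID of the artifact this step produced


@dataclass
class LineageLog:
    """Append-only record of transformations, indexed by the artifact each step produced."""
    steps: dict[str, LineageStep] = field(default_factory=dict)

    def record(self, name: str, inputs: list[str], output: str) -> None:
        # Refuse to rewrite history: each artifact is produced exactly once.
        if output in self.steps:
            raise ValueError(f"artifact {output!r} is already recorded")
        self.steps[output] = LineageStep(name, list(inputs), output)

    def origins(self, artifact: str) -> set[str]:
        """Raw sources behind `artifact`: ancestors that no recorded step produced."""
        step = self.steps.get(artifact)
        if step is None:
            return {artifact}
        found: set[str] = set()
        for parent in step.inputs:
            found |= self.origins(parent)
        return found

    def history(self, artifact: str) -> list[str]:
        """Names of the steps that led to `artifact`, upstream steps first."""
        step = self.steps.get(artifact)
        if step is None:
            return []
        names: list[str] = []
        for parent in step.inputs:
            names += self.history(parent)
        names.append(step.name)
        return names


# Example: trace a model back to the files it was trained on.
log = LineageLog()
log.record("clean", ["scans_2023.raw"], "scans_clean")
log.record("label", ["scans_clean", "labels.csv"], "train_set")
log.record("train", ["train_set"], "model_v1")
print(sorted(log.origins("model_v1")))  # ['labels.csv', 'scans_2023.raw']
print(log.history("model_v1"))          # ['clean', 'label', 'train']
```

Keying the log by output artifact makes "where did this come from?" a simple walk up the graph, which is the question an auditor typically asks first.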

Explainability: clarifying AI decision-making.

Explainability ensures that AI decisions are understandable and grounded in data that can be traced and evaluated. This matters especially in high-stakes sectors such as healthcare and finance, where understanding the reasoning behind AI decisions can affect lives or investments. By retaining checkpoints, hard drives let developers step back through the stages of model development and assess how changes to data or configuration affected the results. This approach makes AI systems more transparent and understandable, strengthening both their reliability and their usability.

Accountability: making AI workflows auditable.

In AI, accountability ensures that models can be examined and audited by stakeholders. Through checkpoints and data lineage, hard drives provide an audit trail that documents AI development from data input to output, letting organizations investigate the factors behind AI-generated decisions. This audit trail helps organizations meet regulatory standards and assures users that AI systems rest on reliable, reproducible processes. Accountability makes it possible to pinpoint the specific checkpoints at which decisions were made, and so to hold AI systems responsible for their actions.

Security: protecting data integrity.

Security strengthens AI reliability by protecting data against unauthorized access and tampering. Secure storage solutions that include encryption and integrity checks ensure that AI models rely on authentic, unaltered data. Hard drives contribute to security by keeping data in a stable, controlled environment, helping organizations guard against tampering and comply with strict security regulations. By securing data at every stage of the AI process, companies can rely on the integrity of their AI workflows.
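
The integrity-check half of that description can be sketched with Python's standard `hmac` module: a keyed SHA-256 tag is stored alongside each record, and any later change to the record, or a tag forged without the key, fails verification. This is a minimal illustration only. It does not cover encryption, and the hard-coded key is a placeholder assumption, since real deployments would fetch keys from a key-management system.

```python
import hashlib
import hmac


def sign(data: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding `data` to the secret `key`."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify(data: bytes, key: bytes, tag: str) -> bool:
    """True only if `data` is unchanged since it was signed with `key`.

    compare_digest runs in constant time, so timing does not reveal how much of the tag matched.
    """
    return hmac.compare_digest(sign(data, key), tag)


key = b"placeholder-key"  # hypothetical; never hard-code real keys
record = b"sample_id=123,label=benign"
tag = sign(record, key)

print(verify(record, key, tag))                            # True
print(verify(b"sample_id=123,label=malignant", key, tag))  # False: the record was altered
```

A plain hash detects accidental corruption; the secret key is what also stops someone who can edit the stored data from simply recomputing a matching tag.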

Mechanisms for putting trustworthy AI into practice.

Achieving these elements of trustworthy AI depends on solid mechanisms that ensure data integrity, security, and accountability. From checkpoints and governance policies to hashing and mass storage systems, these tools help AI systems meet the high standards that reliable decision-making requires. The sections below look at how each mechanism supports trustworthy AI.

Checkpointing: supporting several elements at once.

Checkpointing means saving the state of an AI model at short, defined intervals during training. AI models are trained on vast datasets through iterative processes that can last anywhere from a few minutes to several days.

Checkpoints act as snapshots of the model's current state (data, parameters, and settings) at many points during training. Written to storage devices every minute or every few minutes, these snapshots let developers keep track of the model's progress and avoid losing valuable work if training is unexpectedly interrupted.
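
A minimal sketch of time-based checkpointing follows, assuming the model state fits in a small JSON-serializable dictionary; real training frameworks serialize tensors with their own save functions. The `save_checkpoint` and `train` names, the toy state, and the 60-second default interval are hypothetical. Each write goes to a temporary file that is then renamed over the previous checkpoint, so an interruption mid-write never leaves a half-written checkpoint behind.

```python
import json
import os
import tempfile
import time


def save_checkpoint(state: dict, path: str) -> None:
    """Atomically replace the checkpoint at `path` with `state`."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write into the same directory so the final rename stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to the drive before publishing them
        os.replace(tmp_path, path)  # readers see the old or the new file, never a mix
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)  # do not leave stray temp files after a failure
        raise


def train(total_steps: int, path: str, interval_s: float = 60.0) -> dict:
    """Toy training loop that checkpoints at most once every `interval_s` seconds."""
    state = {"step": 0, "weights": [0.0]}
    last_save = time.monotonic()
    for step in range(1, total_steps + 1):
        state["weights"][0] += 0.1  # stand-in for a real optimizer update
        state["step"] = step
        if time.monotonic() - last_save >= interval_s:
            save_checkpoint(state, path)
            last_save = time.monotonic()
    save_checkpoint(state, path)  # always keep the final state
    return state
```

Saving on elapsed time rather than on step count keeps the checkpoint cadence close to "every minute or every few minutes" even when step duration varies.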

Checkpoints support trustworthy AI by serving several essential purposes:

Protection against interruptions. Checkpoints protect training jobs against system failures, power outages, and crashes, so models can resume from the last saved state instead of restarting from scratch.

Model improvement and optimization. By saving checkpoints, developers can analyze past states, tune model parameters, and improve performance over time.

Legal compliance and intellectual property protection. Checkpoints provide a transparent record that helps organizations comply with legal frameworks and protect proprietary methodologies.

Building trust and ensuring transparency. Checkpointing records the model's state, which makes AI decisions more traceable and understandable and thereby supports explainability.
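
The first purpose, resuming after an interruption, can be illustrated with a small sketch: on start-up the loop loads the last checkpoint if one exists and continues from the step after it, so only the work done since that checkpoint is repeated. The function names, the toy state, and the `fail_at` parameter (used here only to simulate a crash) are hypothetical.

```python
import json
import os
from typing import Optional


def load_or_init(path: str) -> dict:
    """Resume from the last saved state if a checkpoint exists; otherwise start fresh."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "weights": [0.0]}


def save(state: dict, path: str) -> None:
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)  # swap in the new checkpoint in a single step


def train_resumable(total_steps: int, path: str, save_every: int = 10,
                    fail_at: Optional[int] = None) -> dict:
    state = load_or_init(path)
    # Continue from the step after the last checkpoint instead of step 1.
    for step in range(state["step"] + 1, total_steps + 1):
        if step == fail_at:
            raise RuntimeError(f"simulated crash at step {step}")
        state["weights"][0] += 1.0  # stand-in for a real update
        state["step"] = step
        if step % save_every == 0:
            save(state, path)
    return state
```

For example, a run that crashes at step 17 with `save_every=10` restarts from the step-10 checkpoint: steps 11 to 16 are redone, but steps 1 to 10 are not.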

Governance policies: guiding responsible data management.

Governance policies define the framework within which data is managed, protected, and used throughout the AI lifecycle. These rules keep AI systems compliant with regulations and internal standards, providing a secure and ethical data-handling environment. Governance policies set access controls, data retention schedules, and compliance procedures, supporting security and accountability within AI workflows. By defining these standards, organizations make sure their AI systems are transparent, reliable, and built on sound data-management principles.
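
Access controls and retention schedules can be expressed as data, so they are enforced and audited the same way everywhere. The sketch below shows one hypothetical way to do that; the data classes, roles, and retention periods are invented for illustration and are not recommendations or regulatory requirements.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Policy:
    """Governance rule for one class of data (hypothetical schema)."""
    readers: frozenset[str]  # roles allowed to read this data
    retention: timedelta     # minimum time a record must be kept


# Hypothetical policy table; real values come from legal and compliance teams.
POLICIES = {
    "raw_medical_images": Policy(frozenset({"clinician", "auditor"}), timedelta(days=3650)),
    "training_checkpoints": Policy(frozenset({"ml_engineer", "auditor"}), timedelta(days=1095)),
}


def can_read(role: str, data_class: str) -> bool:
    """Deny by default: unknown data classes and unlisted roles get no access."""
    policy = POLICIES.get(data_class)
    return policy is not None and role in policy.readers


def may_delete(data_class: str, created: date, today: date) -> bool:
    """A record may be deleted only once its retention period has fully elapsed."""
    return today >= created + POLICIES[data_class].retention


print(can_read("auditor", "raw_medical_images"))      # True
print(can_read("ml_engineer", "raw_medical_images"))  # False
print(may_delete("training_checkpoints", date(2024, 1, 1), date(2025, 1, 1)))  # False
```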

Hashing: securing data lineage.

Hashing plays a central role in preserving data lineage by creating effectively unique digital fingerprints for data. These fingerprints let organizations verify that data has not been modified or tampered with at any point in the AI process. By hashing datasets and checkpoints, AI systems ensure the consistency and integrity of their data inputs, which strengthens security and supports transparency. Hard drives store these hash records, allowing organizations to verify data authenticity and rely on the integrity of their AI workflows.
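
As an illustration, the sketch below fingerprints every file in a dataset directory with SHA-256 from Python's standard `hashlib`, stores the fingerprints in a manifest, and later reports which recorded files no longer match. Reading in fixed-size chunks keeps memory use constant even for very large files. The function names and the 1 MiB chunk size are assumptions made for this example, and files added after the manifest was built are not flagged by this simple check.

```python
import hashlib
from pathlib import Path


def fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so very large files never need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file under `root` (as a relative path) to its fingerprint."""
    return {str(p.relative_to(root)): fingerprint(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def changed_files(root: Path, manifest: dict[str, str]) -> list[str]:
    """Manifest entries whose file content changed or whose file has disappeared."""
    current = build_manifest(root)
    return sorted(name for name, digest in manifest.items() if current.get(name) != digest)
```

Storing the manifest alongside the dataset and each checkpoint lets any later stage confirm it is reading exactly the bytes that were recorded.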

High-capacity storage systems: enabling secure, scalable data retention.

Mass storage systems, particularly those built on hard drives, provide the baseline capacity needed to store and manage the large data volumes essential to trustworthy AI.

Hard drives offer scalable, cost-effective storage that gives AI systems access to both current and archived data. These systems support transparency by keeping records accessible, explainability by preserving data over time, and security by providing stable environments for data storage.

Seagate Exos® series hard drives, which incorporate Mozaic 3+™ technology, are designed specifically for this kind of workload. They store the raw datasets that feed AI models, detailed records of data-creation processes, iterative checkpoints during model training, and the outputs of AI analyses.

Hard drives are central to this transparency because they store the vast datasets and other critical information that AI models rely on. This data remains readily accessible through a combination of networked hard drives for long-term retention and SSDs for immediate access, which lets organizations keep track of every decision point in the AI lifecycle.
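
The split described here, SSDs for data that needs immediate access and networked hard drives for long-term retention, is often driven by a simple placement rule. The sketch below assumes a recency-based policy with a hypothetical seven-day hot window; the `Dataset` type and dataset names are illustrative, and real tiering engines typically weigh more signals, such as access frequency and object size.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Dataset:
    name: str
    last_access: datetime


def choose_tier(ds: Dataset, now: datetime, hot_window: timedelta = timedelta(days=7)) -> str:
    """Recently used data stays on SSD for fast access; everything else is kept on HDD."""
    return "ssd" if now - ds.last_access <= hot_window else "hdd"


def plan_placement(datasets: list[Dataset], now: datetime) -> dict[str, str]:
    """Map each dataset name to the tier it should live on."""
    return {ds.name: choose_tier(ds, now) for ds in datasets}


now = datetime(2025, 1, 10)
catalog = [
    Dataset("current_training_shard", datetime(2025, 1, 9)),
    Dataset("2023_raw_archive", datetime(2024, 3, 1)),
]
print(plan_placement(catalog, now))  # {'current_training_shard': 'ssd', '2023_raw_archive': 'hdd'}
```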

Accurate record-keeping on hard drives helps ensure regulatory compliance, improve explainability, refine models, and encourage greater accountability. Hard drives record the complete data lifecycle, providing clear, traceable records that can be consulted to verify compliance with regulations and policies.

Data volumes are growing across many fields. In healthcare, areas such as genomic research and medical imaging generate petabytes of data each year. IoT devices, including sensors and connected gadgets, along with the explosion of user-generated content on social media, also contribute significantly to this influx of data.

Hard drives stand out as a cost-effective, scalable storage solution. They offer high capacity at the lowest cost per terabyte (a 6:1 advantage over flash storage), making them well suited to long-term data retention. Hard drives are therefore the go-to storage for the large raw datasets used in AI processing and for storing AI analysis results. Beyond long-term storage of inputs and outputs, hard drives also support AI workflows during the compute-intensive training phase, where they hold checkpoints and record the successive iterations of content.
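
To see what a 6:1 cost-per-terabyte ratio means at AI scale, the small calculation below compares the two media for the same capacity. The hard-drive price per terabyte passed in is a hypothetical placeholder, not a quoted price; only the 6:1 ratio comes from the paragraph above.

```python
def media_cost(capacity_tb: float, hdd_cost_per_tb: float, flash_ratio: float = 6.0) -> dict[str, float]:
    """Cost of storing `capacity_tb` on HDD vs. flash, assuming flash costs `flash_ratio` times more per TB."""
    hdd = capacity_tb * hdd_cost_per_tb
    flash = hdd * flash_ratio
    return {"hdd": hdd, "flash": flash, "savings": flash - hdd}


# Hypothetical: 10 PB (10,000 TB) of raw data and checkpoints at a placeholder 15 units per TB.
print(media_cost(10_000, 15.0))  # {'hdd': 150000.0, 'flash': 900000.0, 'savings': 750000.0}
```

Because the ratio is fixed, the absolute gap grows linearly with capacity, which is why it matters most for the petabyte-scale archives described above.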

Conclusion.

Transparency, data lineage, explainability, accountability, and security pave the way to reliable AI. Together, these elements let organizations turn raw data into a dependable foundation for innovation.

By managing the entire AI data workflow, from capturing raw data to retaining checkpoints and preserving analysis results, hard drives play an essential role in validating, tuning, and sustaining the reliability of AI models over the long term. By relying on hard drives for long-term retention, AI developers can revisit earlier training runs, analyze results, and refine models for greater efficiency and accuracy.

As AI spreads across industries, preserving data lineage, meeting regulatory standards, and communicating clearly with all stakeholders become essential. Seagate engineers designed hard drives to be the scalable, cost-effective storage solutions this evolution requires, so that AI developers can build intelligent, trustworthy systems.